What we do

Get clear, actionable insights through comprehensive data analytics that prove your impact and guide smart decisions. We help you measure what matters through tailored evaluations, literature reviews and thematic analysis that go beyond just collecting data.

By combining rigorous methodologies with practical expertise, including detailed case study approaches, we deliver evidence and recommendations you can use to enhance performance and demonstrate value to stakeholders.

Mixed-method Evaluations

We undertake complex mixed-methods research and evaluations, using robust qualitative and quantitative methodologies to understand impact and support decision-making.

Our experts create bespoke data collection tools and our thematic analysis generates actionable insights and recommendations.

- Document review - provides an independent assessment of the literature through structured qualitative research methods.

- Stakeholder Engagement - including in-depth focus group discussions, interviews and workshops to understand how initiatives have been implemented and their impact.

- Data Analytics - we use comprehensive quantitative research design to provide information on how your initiative is performing and identify future opportunities.

Monitoring + Evaluation Frameworks

Allen + Clarke has deep experience developing evaluation frameworks for large-scale and complex policies and programs, drawing on global research and data analytics.

We work collaboratively with you to develop bespoke monitoring frameworks that deliver meaningful data insights to measure your impact.

- Theory of change - this is a key component of our research methodology. We develop a model of how the initiative, with its inputs and activities, contributes to changes over time, forming the foundation of your evaluation framework.

- Program Logic - through targeted qualitative research, we develop a diagrammatic model showing the connection between the initiative and its anticipated impacts in the short, medium and long term.

- Monitoring + Measurement - we develop key evaluation questions, performance indicators and quantitative research tools to generate valuable data insights and strengthen your evaluation framework.

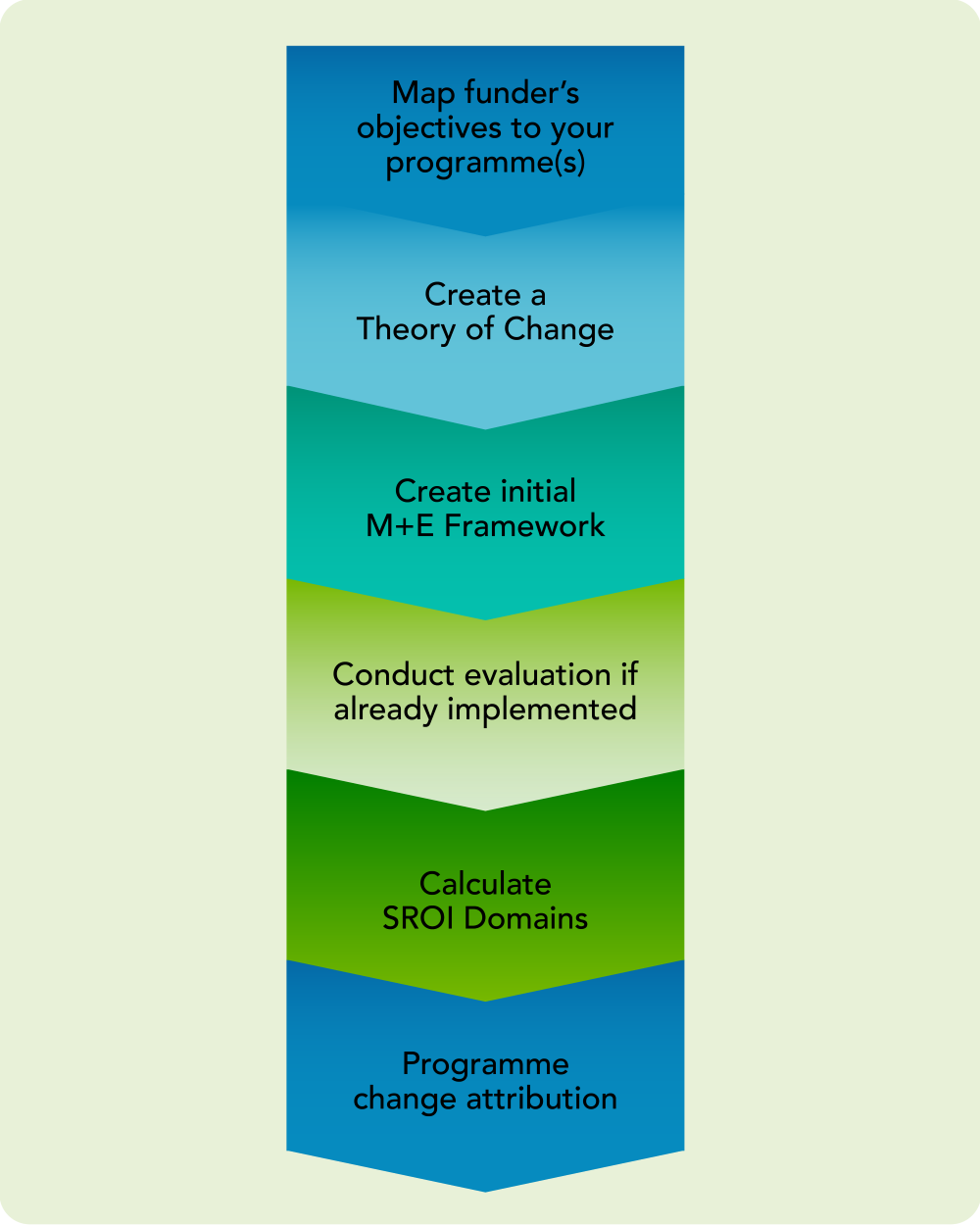

Lapsing Funding Evaluations

We undertake comprehensive, independent evaluations of initiatives, designed to meet the Department of Treasury and Finance’s Performance Measurement Framework requirements for lapsing programs.

By understanding the social and financial impact of your program, we help justify investment in initiatives that drive meaningful change.

- Theory of Change - developing a program logic model of how the initiative, with its inputs and activities, contributes to changes over time.

- Mixed methods evaluations - utilising robust qualitative and quantitative research methodologies to understand how your initiative has had impact and identify opportunities for growth.

- Address key questions - on the justification, effectiveness, delivery and efficiency of the program, and the risk of ceasing program funding, to support future budget bids.

Related Projects

National Standards for counsellors and psychotherapists

Supporting aged care providers deliver inclusive services: Evaluating OPAN's Education Initiative

Robust Regulation – Designing interventions with lasting impact

Reviews

We provide a range of review services that use mixed-methods research, enhanced by data analytics, to provide credibility, assurance, evidence and support for future decision-making.

Our reviews are tailored to the decisions you face.

- Desktop reviews - we conduct thorough qualitative research to deliver independent assessments of your operating model, supported by robust documentary evidence.

- Stakeholder Engagement - via qualitative and quantitative research methods, including interviews, focus groups, workshops, case studies and surveys, to gather a range of feedback on performance or processes.

- Literature Reviews - comprehensive literature reviews and data analytics of peer-reviewed and grey literature to build a robust evidence base that supports decision-making.

Our Process

Understand

We dig deeper to reframe challenges and uncover what truly matters.

Plan

We create tailored blueprints that save time and drive success.

Deliver

We communicate transparently while managing risk with empathy and agility.

Review

We test findings with stakeholders to eliminate surprises and gain insights.

Close

We capture lessons and celebrate achievements to continuously improve.

Frequently Asked Questions

Can you evaluate initiatives that don't have clear objectives or baseline data?

Yes, we often evaluate initiatives that don’t have clear objectives or baseline data. In those cases, we would begin by developing a theory of change or identifying appropriate indicators to ensure that we are asking the right questions. While baseline data is valuable, we can also conduct a meaningful evaluation using other methods such as contribution analysis.

How do you measure intangible outcomes like wellbeing or community resilience?

At the outset of an evaluation, we agree with you how we will measure intangible outcomes. We use qualitative methods to capture rich narratives about participant experiences and can use existing frameworks to assess holistic wellbeing. We also have experience using participatory techniques where communities define and assess their own success criteria. These approaches can provide meaningful insights beyond simple metrics.

How do you communicate complex evaluation findings to different audiences?

We can produce outputs tailored to the needs and preferences of different audiences, such as technical specialists, decision-makers, interested stakeholders, or the general public. For example, a comprehensive technical report might be accompanied by a separate executive summary, visual quick-reference dashboards, presentation slides, or one-pagers. We can also deliver workshops or presentations to a range of audiences to help them engage with the findings.

Can you evaluate programs that operate across multiple sites or regions?

Yes, we regularly evaluate programs that operate across multiple sites or regions. Our team can travel to sites in New Zealand, Australia, or internationally as needed, or we can use digital tools to enable remote data collection.

Depending on the scope of the evaluation, we can use a sampling approach to select representative sites, use comparative case studies to understand contextual differences, and use a mixture of methods to balance the depth and breadth of the evaluation data.